Interview points

Runnable vs Thread

| Runnable | Thread |
|---|---|
| interface | class |

The most commonly cited difference is that a class extending Thread cannot extend any other class, because Java allows only single inheritance. A class that implements Runnable is still free to extend another class. The “more important” difference is that when you extend Thread, each thread has its own unique object, whereas a single Runnable instance can be passed to many threads, which then share that instance and its state.

    // Reference from stackoverflow

    // Implement Runnable Interface...
    class ImplementsRunnable implements Runnable {

        private int counter = 0;

        public void run() {
            counter++;
            System.out.println("ImplementsRunnable : Counter : " + counter);
        }
    }

    // Extend Thread class...
    class ExtendsThread extends Thread {

        private int counter = 0;

        public void run() {
            counter++;
            System.out.println("ExtendsThread : Counter : " + counter);
        }
    }

    // Use the above classes here in main to understand the differences more clearly...
    public class ThreadVsRunnable {

        public static void main(String[] args) throws Exception {
            // Multiple threads share the same object.
            ImplementsRunnable rc = new ImplementsRunnable();
            Thread t1 = new Thread(rc);
            t1.start();
            Thread.sleep(1000); // Waiting for 1 second before starting next thread
            Thread t2 = new Thread(rc);
            t2.start();
            Thread.sleep(1000); // Waiting for 1 second before starting next thread
            Thread t3 = new Thread(rc);
            t3.start();

            // Creating new instance for every thread access.
            ExtendsThread tc1 = new ExtendsThread();
            tc1.start();
            Thread.sleep(1000); // Waiting for 1 second before starting next thread
            ExtendsThread tc2 = new ExtendsThread();
            tc2.start();
            Thread.sleep(1000); // Waiting for 1 second before starting next thread
            ExtendsThread tc3 = new ExtendsThread();
            tc3.start();
        }
    }

Output of the above program:

ImplementsRunnable : Counter : 1

ImplementsRunnable : Counter : 2

ImplementsRunnable : Counter : 3

ExtendsThread : Counter : 1

ExtendsThread : Counter : 1

ExtendsThread : Counter : 1

https & http

| HTTP | HTTPS |
|---|---|
| default port 80 | default port 443 |
| URL begins with "http", insecure (sent as plaintext) | URL begins with "https", secure (encrypted) |

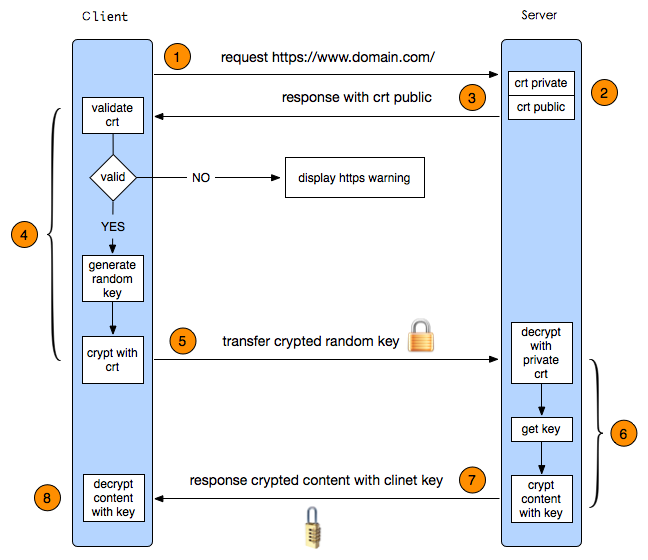

HTTPS = HTTP + a cryptographic protocol (TLS, formerly SSL). To achieve this security, HTTPS relies on Public Key Infrastructure (PKI): the server keeps its private key secret, while the matching public key is handed to browsers. Public keys are distributed in certificates signed by certificate authorities (CAs), and the browser maintains the list of CAs it trusts so that it can verify each certificate.

https process

- First, the client connects to the server on port 443 and asks for a secure session.

- After receiving the request, the server responds with its public certificate.

- The client verifies the certificate, for example that it has not expired and that it is valid. If it is OK, the client generates a random key and encrypts it with the public key from the certificate it just received. (The random key is used to communicate with the server.)

- The client sends the encrypted key to the server.

- The server decrypts it with its private key to recover the random key. From then on, everything the server and the client send to each other is encrypted with this key. (This is the classic RSA key exchange; newer TLS versions agree on the key with Diffie-Hellman instead, but the idea of ending up with a shared random key is the same.)

Why use the exchanged random key for communication between server and client?

I think the important reason is that the random key is used for symmetric encryption, which is much faster than asymmetric encryption and therefore far more efficient for the bulk of the traffic.
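The key exchange described above can be sketched with the JDK's own crypto classes. This is only an illustration of the idea, not how a real TLS stack is implemented: the class name `HybridEncryptionSketch`, the method `roundTrip`, and the choice of RSA-OAEP and AES-GCM are assumptions made for the example, and both "client" and "server" run in the same method.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical demo class: simulates client and server in one process.
final class HybridEncryptionSketch {

    /** Encrypts a message as the "client" and returns what the "server" decrypts. */
    static String roundTrip(String message) {
        try {
            // Server: long-lived RSA key pair; the public half would travel in the certificate.
            KeyPairGenerator rsaGenerator = KeyPairGenerator.getInstance("RSA");
            rsaGenerator.initialize(2048);
            KeyPair serverKeys = rsaGenerator.generateKeyPair();

            // Client: generate the random symmetric key.
            KeyGenerator aesGenerator = KeyGenerator.getInstance("AES");
            aesGenerator.init(128);
            SecretKey sessionKey = aesGenerator.generateKey();

            // Client: encrypt the random key with the server's public key (slow, done once).
            Cipher rsaEncrypt = Cipher.getInstance("RSA/ECB/OAEPWithSHA-256AndMGF1Padding");
            rsaEncrypt.init(Cipher.ENCRYPT_MODE, serverKeys.getPublic());
            byte[] encryptedKey = rsaEncrypt.doFinal(sessionKey.getEncoded());

            // Server: recover the random key with its private key.
            Cipher rsaDecrypt = Cipher.getInstance("RSA/ECB/OAEPWithSHA-256AndMGF1Padding");
            rsaDecrypt.init(Cipher.DECRYPT_MODE, serverKeys.getPrivate());
            SecretKey recoveredKey = new SecretKeySpec(rsaDecrypt.doFinal(encryptedKey), "AES");

            // Client: encrypt the actual content with the fast symmetric cipher.
            byte[] iv = new byte[12];
            new SecureRandom().nextBytes(iv);
            Cipher aesEncrypt = Cipher.getInstance("AES/GCM/NoPadding");
            aesEncrypt.init(Cipher.ENCRYPT_MODE, sessionKey, new GCMParameterSpec(128, iv));
            byte[] ciphertext = aesEncrypt.doFinal(message.getBytes(StandardCharsets.UTF_8));

            // Server: decrypt the content with the recovered key.
            Cipher aesDecrypt = Cipher.getInstance("AES/GCM/NoPadding");
            aesDecrypt.init(Cipher.DECRYPT_MODE, recoveredKey, new GCMParameterSpec(128, iv));
            return new String(aesDecrypt.doFinal(ciphertext), StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("crypto setup failed", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello over a simulated secure channel"));
    }
}
```

The asymmetric step runs once per session and only protects the small random key; every later message uses the symmetric cipher, which is the efficiency argument made above.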

7-layer OSI model

- Physical layer

- Data link layer

- Network layer

- Transport layer

- Session layer

- Presentation layer

- Application layer

visit www.google.com

- DNS
  - 1.1 Check the browser cache.
  - 1.2 Check the OS cache.
  - 1.3 Check the router cache.
  - 1.4 The resolver asks the `root server`, which answers with the `TLD server` authoritative for `com`. (TLD = top-level domain; there are over 1,000 top-level domains.)
  - 1.5 The resolver asks the `TLD server`, which answers with the `name server` configured for `google.com`.
  - 1.6 The resolver asks the `name server`, which responds with the IP address (the `A record`).

- TCP 3-way handshake

- HTTP Request - Client

- HTTP Response - Server

- Render the HTML page
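The DNS lookup order can be modelled with a few in-memory maps. The sketch below is a toy simulation, not a real resolver: the class `DnsLookupSketch`, the server labels (`tld-com`, `ns-google`), and the address `203.0.113.10` (taken from a range reserved for documentation) are all made up for illustration, and names outside this tiny hierarchy are not handled.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical toy model of the lookup order; no network access is performed.
final class DnsLookupSketch {
    private final Map<String, String> browserCache = new HashMap<>();
    private final Map<String, String> osCache = new HashMap<>();
    private final Map<String, String> routerCache = new HashMap<>();

    // Made-up hierarchy: root knows the TLD server, the TLD server knows the
    // name server, and the name server holds the A record.
    private static final Map<String, String> ROOT = Map.of("com", "tld-com");
    private static final Map<String, Map<String, String>> TLD_SERVERS =
            Map.of("tld-com", Map.of("google.com", "ns-google"));
    private static final Map<String, Map<String, String>> NAME_SERVERS =
            Map.of("ns-google", Map.of("www.google.com", "203.0.113.10"));

    /** Records which places were consulted during the most recent resolve call. */
    final List<String> trace = new ArrayList<>();

    String resolve(String host) {
        trace.clear();

        // Steps 1.1 to 1.3: try each cache in order and stop at the first hit.
        List<Map<String, String>> caches = List.of(browserCache, osCache, routerCache);
        String[] cacheNames = {"browser cache", "OS cache", "router cache"};
        for (int i = 0; i < caches.size(); i++) {
            trace.add(cacheNames[i]);
            String cached = caches.get(i).get(host);
            if (cached != null) {
                return cached;
            }
        }

        // Steps 1.4 to 1.6: walk the hierarchy from the root downwards.
        String[] labels = host.split("\\.");
        String tld = labels[labels.length - 1];
        String domain = labels[labels.length - 2] + "." + tld;

        trace.add("root server");
        String tldServer = ROOT.get(tld);
        trace.add("TLD server");
        String nameServer = TLD_SERVERS.get(tldServer).get(domain);
        trace.add("name server");
        String ip = NAME_SERVERS.get(nameServer).get(host);

        browserCache.put(host, ip); // later lookups stop at step 1.1
        return ip;
    }

    public static void main(String[] args) {
        DnsLookupSketch dns = new DnsLookupSketch();
        System.out.println(dns.resolve("www.google.com") + " via " + dns.trace);
        System.out.println(dns.resolve("www.google.com") + " via " + dns.trace);
    }
}
```

The second call in `main` is answered from the browser cache, which is why caching sits at the front of the sequence.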

Process & Thread

| Process | Thread |
|---|---|
| an executing program; contains one or more threads | a single path of execution within a process |
| has its own separate address space | shares its address space with the other threads in the process |
| context switching and communication are more expensive than between threads | context switching and communication are cheaper |

ArrayList & LinkedList

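| ArrayList | LinkedList |
|---|---|
| resizable (dynamic) array | doubly linked list |
| backed by an array of fixed capacity that is reallocated and copied as the list grows | nodes are allocated one at a time, so there is no capacity to grow |
| insertion and removal in the middle are O(n) because elements must be shifted; appending is amortized O(1); access by index is O(1) | insertion and removal are O(1) at either end or at an iterator's position; reaching an index first takes O(n) |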
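A small sketch of the operations compared in the table, with the cost of each call noted in comments. The class and method names are hypothetical; only `java.util` classes are used.

```java
import java.util.ArrayList;
import java.util.LinkedList;
import java.util.List;
import java.util.ListIterator;

// Hypothetical demo class contrasting the two list implementations.
final class ListComparisonSketch {

    static List<Integer> arrayListDemo() {
        List<Integer> list = new ArrayList<>(2); // 2 is only the initial capacity, not a limit
        for (int i = 0; i < 5; i++) {
            list.add(i);                         // amortized O(1); the backing array grows as needed
        }
        list.add(0, -1);                         // O(n): every existing element shifts right
        int third = list.get(3);                 // O(1): direct index into the array
        System.out.println("ArrayList element at index 3: " + third);
        return list;
    }

    static List<Integer> linkedListDemo() {
        LinkedList<Integer> list = new LinkedList<>();
        for (int i = 0; i < 5; i++) {
            list.addLast(i);                     // O(1): link a new node at the tail
        }
        list.addFirst(-1);                       // O(1): link a new node at the head
        ListIterator<Integer> it = list.listIterator();
        while (it.hasNext()) {                   // O(n) to walk to the element...
            if (it.next() == 2) {
                it.remove();                     // ...but O(1) to unlink it once positioned
            }
        }
        return list;
    }

    public static void main(String[] args) {
        System.out.println(arrayListDemo());
        System.out.println(linkedListDemo());
    }
}
```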

Hashtable & HashMap

| Hashtable | HashMap |
|---|---|
| thread-safe (its methods are synchronized) | not thread-safe |
| null keys and null values are not allowed | one null key and any number of null values are allowed |
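The null-handling row can be checked directly. In this minimal sketch the class and helper names are hypothetical; the behaviour shown is that of the standard `java.util.HashMap` and `java.util.Hashtable`.

```java
import java.util.HashMap;
import java.util.Hashtable;
import java.util.Map;

// Hypothetical demo class probing how each map reacts to null.
final class NullKeySketch {

    /** Returns true if the map accepts a null key, false if it rejects it. */
    static boolean acceptsNullKey(Map<String, String> map) {
        try {
            map.put(null, "value");
            return true;
        } catch (NullPointerException e) {
            return false;
        }
    }

    /** Returns true if the map accepts a null value, false if it rejects it. */
    static boolean acceptsNullValue(Map<String, String> map) {
        try {
            map.put("key", null);
            return true;
        } catch (NullPointerException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("HashMap null key:     " + acceptsNullKey(new HashMap<>()));
        System.out.println("HashMap null value:   " + acceptsNullValue(new HashMap<>()));
        System.out.println("Hashtable null key:   " + acceptsNullKey(new Hashtable<>()));
        System.out.println("Hashtable null value: " + acceptsNullValue(new Hashtable<>()));
    }
}
```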

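Hashtable & ConcurrentHashMap

| Hashtable | ConcurrentHashMap |
|---|---|
| locks on every operation, including reads | locks only on updates such as put and remove; reads generally do not block |
| one lock for all elements | many locks, so threads updating different parts of the map do not block each other |
| the whole table is guarded as a single unit | the table is divided into independently locked parts (segments before Java 8, individual bins since Java 8) |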
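A minimal sketch of several threads updating one `ConcurrentHashMap` without any external locking. The class name, the method `countWithThreads`, and the key `"hits"` are assumptions made for the example; `merge` is used because it performs the read-modify-write atomically.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical demo class: concurrent counting without explicit locks.
final class ConcurrentCountSketch {

    /** Starts the given number of threads, each incrementing one shared counter. */
    static int countWithThreads(int threads, int incrementsPerThread) {
        Map<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];

        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    // merge is atomic on ConcurrentHashMap, so no update is lost.
                    counts.merge("hits", 1, Integer::sum);
                }
            });
            workers[i].start();
        }

        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counts.getOrDefault("hits", 0);
    }

    public static void main(String[] args) {
        System.out.println(countWithThreads(4, 1000));
    }
}
```

A separate `get` followed by `put` would not be safe here even on a `ConcurrentHashMap`, because another thread could update the entry between the two calls.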

Override & Overload

| Override | Overload |
|---|---|
| happens between classes: a parent class and a child class | usually happens within the same class |
| return type must be the same (or a subtype, known as a covariant return type) | return type does not need to be the same |
| parameters must be the same | parameters must be different |
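The rules in the table, shown as a short sketch. All class and method names (`Animal`, `Dog`, `describe`) are hypothetical and exist only for the example.

```java
// Hypothetical demo class contrasting overriding and overloading.
final class OverrideOverloadSketch {

    static class Animal {
        String sound() { return "..."; }
        Animal create() { return new Animal(); }
    }

    static class Dog extends Animal {
        // Override: same name and parameters as the parent method.
        @Override
        String sound() { return "Woof"; }

        // Override with a covariant return type: Dog is a subtype of Animal.
        @Override
        Dog create() { return new Dog(); }
    }

    // Overloads: same name in the same class, different parameter lists.
    static String describe(int value) { return "int"; }
    static String describe(String value) { return "String"; }

    // The return type may differ too, as long as the parameters differ.
    static int describe(int a, int b) { return a + b; }

    public static void main(String[] args) {
        Animal pet = new Dog();
        System.out.println(pet.sound());      // resolved at run time from the object's class
        System.out.println(describe(1));      // resolved at compile time from the argument types
        System.out.println(describe("one"));
        System.out.println(describe(1, 2));
    }
}
```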

Mutex & Lock & Semaphore & Monitor

Mutex: it can also be used as a lock, but in this terminology it is system wide and can be shared with other processes.

Lock: it allows only one thread at a time to access the data or enter the critical section, and it is not shared with other processes.

Semaphore: it does the same thing as a mutex but allows up to x threads to enter the critical section at once.

Monitor: it's a mechanism for controlling concurrent access to an object. It can be pictured as a building with a special room and a waiting room: the special room can be occupied by only one thread at a time, and the waiting room holds the remaining threads.
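The semaphore definition can be illustrated with `java.util.concurrent.Semaphore`. In the sketch below, the class name and the method `peakConcurrency` are hypothetical; the method reports the largest number of threads that were inside the critical section at the same moment. With a single permit the semaphore behaves like the lock described above.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class: measures how many threads a semaphore lets in at once.
final class SemaphoreSketch {

    static int peakConcurrency(int permits, int threads) {
        Semaphore semaphore = new Semaphore(permits);
        AtomicInteger inside = new AtomicInteger(); // threads currently in the critical section
        AtomicInteger peak = new AtomicInteger();   // highest value "inside" ever reached
        Thread[] workers = new Thread[threads];

        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                try {
                    semaphore.acquire();            // blocks while all permits are taken
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                try {
                    peak.accumulateAndGet(inside.incrementAndGet(), Math::max);
                    Thread.sleep(20);               // simulated work
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    inside.decrementAndGet();
                    semaphore.release();            // hand the permit to a waiting thread
                }
            });
            workers[i].start();
        }

        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return peak.get();
    }

    public static void main(String[] args) {
        System.out.println("peak with 2 permits: " + peakConcurrency(2, 6));
        System.out.println("peak with 1 permit:  " + peakConcurrency(1, 4));
    }
}
```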

Deadlock

Deadlock is a state in which each member of a group is waiting for another member. In other words, every thread in a set holds some resources while waiting to acquire a resource held by another thread in the set.

- Circular wait: to break it, all resources are labeled with a number, and threads are required to acquire resources in that sorted order.

- Preemption: we can take a resource away from one thread and give it to another, which resolves the deadlock.
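The resource-ordering idea can be sketched as follows. The `Account` class, its numeric `id`, and the method names are hypothetical; the point is that both threads always lock the lower-numbered account first, so they can never end up waiting on each other in a cycle even though they transfer in opposite directions.

```java
// Hypothetical demo class: avoiding circular wait by ordering lock acquisition.
final class LockOrderingSketch {

    static final class Account {
        final int id;      // the number that labels this resource
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    static void transfer(Account from, Account to, long amount) {
        // Always lock the account with the smaller id first, whatever the direction.
        Account first = from.id < to.id ? from : to;
        Account second = (first == from) ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    /** Runs transfers in both directions at once and returns the combined balance. */
    static long runOpposingTransfers(int rounds) {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);

        Thread aToB = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(a, b, 1);
        });
        Thread bToA = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(b, a, 1);
        });
        aToB.start();
        bToA.start();

        try {
            aToB.join();
            bToA.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return a.balance + b.balance;
    }

    public static void main(String[] args) {
        System.out.println("total after transfers: " + runOpposingTransfers(10_000));
    }
}
```

If `transfer` instead locked `from` and then `to`, the two threads could each hold one account while waiting for the other, which is exactly the circular wait described above.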

Starvation

It happens when low-priority threads/processes stay blocked because high-priority threads/processes keep executing. Deadlock is the ultimate form of starvation.

Load Balancing Algorithm

| Round Robin | Weighted Round Robin | Least Connection |
|---|---|---|
| The simplest algorithm, easy to implement and understand. It distributes client requests to the servers in turn, which assumes that all servers have the same capacity. | It assigns each server a weight based on some criterion such as traffic-handling capacity, and servers with higher weights receive more requests. | It sends each request to the server with the fewest active connections, which reduces the chance of server overload. |
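A minimal sketch of the three selection strategies. All names (`LoadBalancerSketch`, `Server`, `weight`, `activeConnections`) are hypothetical, the class is not thread-safe, and the weighted variant uses the simplest possible approach of repeating each server in a list as many times as its weight.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class: three ways to pick the next server.
final class LoadBalancerSketch {

    static final class Server {
        final String name;
        final int weight;          // relative capacity, used by weighted round robin
        int activeConnections;     // maintained by the caller in this sketch

        Server(String name, int weight) {
            this.name = name;
            this.weight = weight;
        }
    }

    private final List<Server> servers;
    private final List<Server> weightedRing = new ArrayList<>();
    private int roundRobinIndex;
    private int weightedIndex;

    LoadBalancerSketch(List<Server> servers) {
        this.servers = List.copyOf(servers);
        // A server with weight w appears w times in the ring.
        for (Server server : this.servers) {
            for (int i = 0; i < server.weight; i++) {
                weightedRing.add(server);
            }
        }
    }

    /** Round robin: hand out servers in turn, ignoring capacity. */
    Server roundRobin() {
        Server chosen = servers.get(roundRobinIndex);
        roundRobinIndex = (roundRobinIndex + 1) % servers.size();
        return chosen;
    }

    /** Weighted round robin: cycle through the ring, so heavier servers come up more often. */
    Server weightedRoundRobin() {
        Server chosen = weightedRing.get(weightedIndex);
        weightedIndex = (weightedIndex + 1) % weightedRing.size();
        return chosen;
    }

    /** Least connection: pick the server with the fewest active connections. */
    Server leastConnections() {
        Server best = servers.get(0);
        for (Server server : servers) {
            if (server.activeConnections < best.activeConnections) {
                best = server;
            }
        }
        return best;
    }
}
```

For example, with servers A (weight 1) and B (weight 2), `roundRobin()` alternates A, B, A, ... while `weightedRoundRobin()` yields A, B, B and then repeats.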